Automatic backups of Home Assistant in Docker: rsync and rclone

There are many ways to back up your Home Assistant instance, but today we will review a simple script that runs on your server and makes both local and remote backups.

The core of this method, though, is not the script itself but two powerful Linux command-line utilities: rsync and rclone.

Rsync is a file transfer and synchronization utility. It copies large amounts of data quickly because, after the first run, it transfers only what has changed in the source.

Rclone is a command-line file manager for cloud storage. It lets us upload our backups to any of the impressive list of cloud storage services it supports.

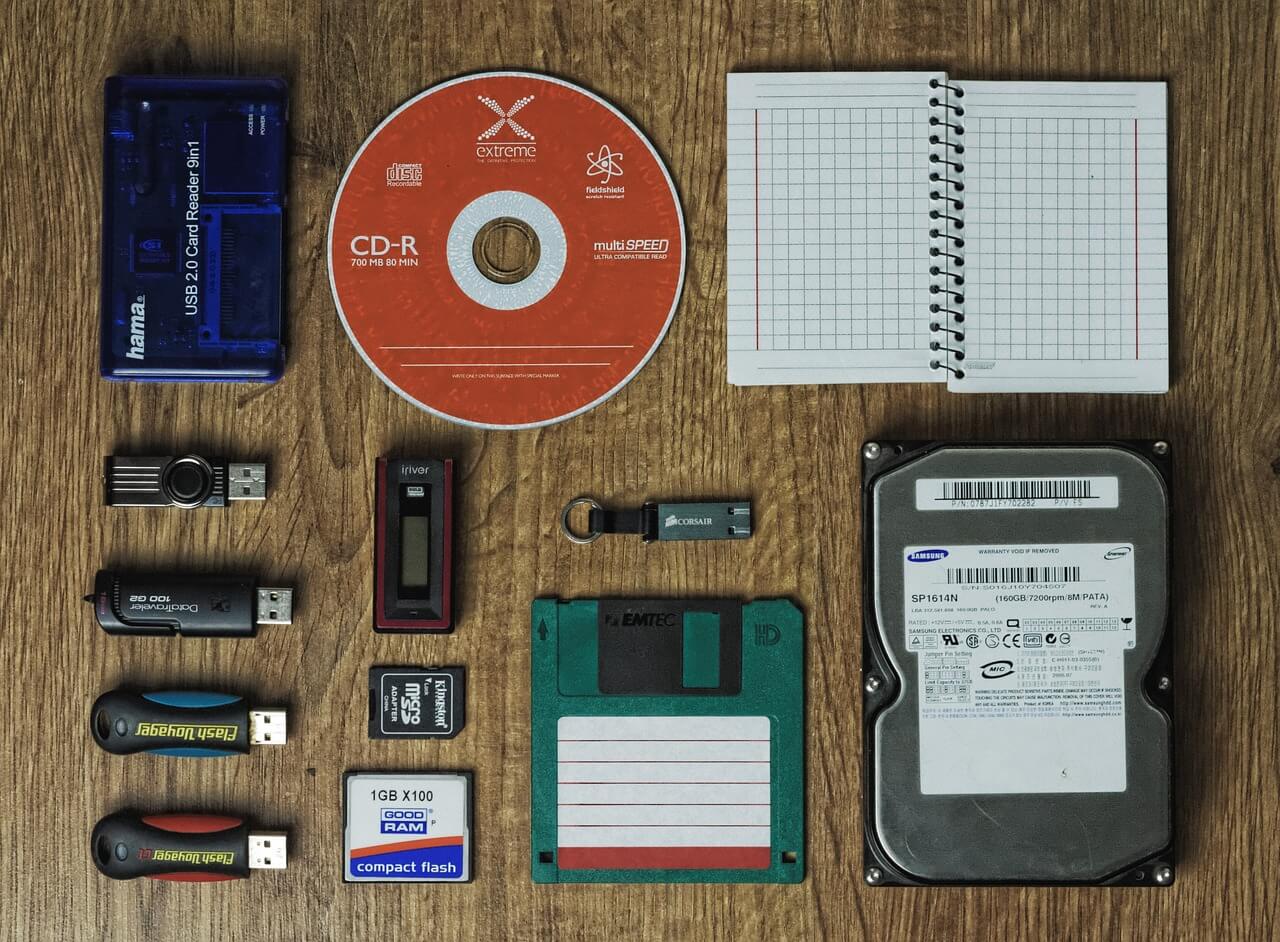

First of all, we need to decide what to back up. Home Assistant stores all its important data in files, and so does Zigbee2MQTT, for example. So copying their configuration folders is enough to restore the previous functionality after a system failure.
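One way to make the "what to back up" decision explicit is to keep the folder list in one place and check it before each run. This is a sketch that assumes the four folders used in the script later in this article; the BACKUP_SOURCES array and the check_sources function are hypothetical names, so adjust them to your own stack.

```shell
# Hypothetical list of folders to back up (the paths used later in this article).
BACKUP_SOURCES=(
  /root/esphome
  /root/homeassistant
  /root/mosquitto
  /root/zigbee2mqtt
)

# check_sources DIR... : report every missing folder, return 1 if any is missing
check_sources() {
  local dir missing=0
  for dir in "$@"; do
    if [ ! -d "$dir" ]; then
      echo "Missing source folder: $dir" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
# check_sources "${BACKUP_SOURCES[@]}" || exit 1
```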

Local backup

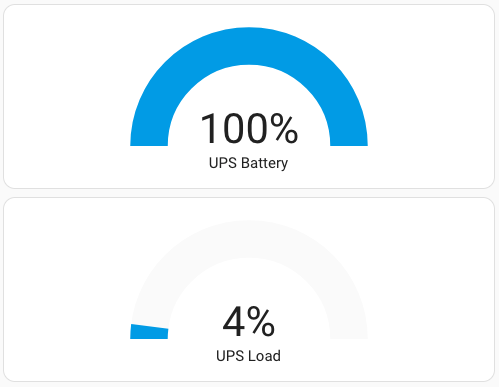

For local backups, you can use an external drive or simply a second HDD/SSD. You can certainly use the same disk, but backups are not only for recovering from a broken configuration, whether it was caused by you or by an updated integration. A backup on separate storage also saves you if your primary disk fails.
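Because the backup disk is separate, it is worth making sure it is actually mounted before copying anything; otherwise the backup could end up on the primary disk, inside the empty mount-point folder. This is a minimal sketch, assuming the disk is mounted at /media/external1 as in the commands in this article and that the mountpoint utility (part of util-linux) is installed; require_mounted is a hypothetical helper name.

```shell
# Hypothetical helper: refuse to continue if nothing is mounted at the given path.
require_mounted() {
  if ! mountpoint -q "$1"; then
    echo "Backup disk is not mounted at $1" >&2
    return 1
  fi
}

# Usage (mount point assumed from this article):
# require_mounted /media/external1 || exit 1
```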

So, to make a backup with rsync:

rsync -a /root/homeassistant /media/external1/backup/smart_home_stack/

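The first run of this command copies the /root/homeassistant directory into the /media/external1/backup/smart_home_stack/ directory. The -a (archive) option copies everything inside /root/homeassistant recursively and preserves symlinks, permissions, modification times, owner, and group. Hard links, ACLs, and extended attributes are only preserved if you also add -H, -A, or -X.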
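To see what the command above produces, and why the trailing slash on the source path matters, you can try rsync in a throwaway directory. This sandbox only creates files under a temporary folder made by mktemp; configuration.yaml here is just sample content.

```shell
# Build a tiny fake source tree in a temporary folder.
demo=$(mktemp -d)
mkdir -p "$demo/src/homeassistant" "$demo/backup"
echo "homeassistant:" > "$demo/src/homeassistant/configuration.yaml"

# No trailing slash on the source: rsync creates a homeassistant folder
# inside the destination, exactly like the command above.
rsync -a "$demo/src/homeassistant" "$demo/backup/"
ls "$demo/backup"            # -> homeassistant

# With a trailing slash, rsync copies the folder's contents instead.
rsync -a "$demo/src/homeassistant/" "$demo/backup_contents/"
ls "$demo/backup_contents"   # -> configuration.yaml

# Clean up when done: rm -rf "$demo"
```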

The second and subsequent runs copy only the files and folders that changed, so they are fast. Restoring works the same way in reverse: rsync copies only the differences from your backup to the working directory. To restore a backup, run:

rsync -a /media/external1/backup/smart_home_stack/homeassistant /root/
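Before restoring for real, a dry run shows what rsync would change without touching any files. This sketch wraps that in a hypothetical preview_restore function. Keep in mind that rsync does not delete files that exist only in the destination unless you add --delete, and that, just like when backing up, it is safer to stop the containers before restoring.

```shell
# Hypothetical helper: list what a restore WOULD change, without changing anything.
# --dry-run performs a trial run; --itemize-changes prints one line per item.
preview_restore() {
  local src=$1 dest=$2
  rsync -a --dry-run --itemize-changes "$src" "$dest"
}

# Usage (paths from this article):
# preview_restore /media/external1/backup/smart_home_stack/homeassistant /root/
```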

Files in use and databases

When copying files that are currently in use by Home Assistant or any other software, rsync simply copies each file in whatever state it is in at the moment rsync reaches it. For most Home Assistant files, this is not critical, but for databases it is: a database copied in the middle of a write can end up inconsistent.

The Home Assistant database stores entity history and statistics. For me, losing all state history when restoring a backup is not an issue. But if it is for you, you’ll need to stop the Docker containers before backing them up. For example, if you are using Docker Compose, you can do it with:

docker compose stop

Run this in the folder where your docker-compose.yaml is located.

Cloud backup

This is where Rclone comes in. Rclone can be easily downloaded and installed on many platforms. For the configuration steps, follow the official documentation for your storage provider, for example, Google Drive.
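After configuring a remote with rclone config, it can help to check that the remote really exists before the script relies on it. This is a sketch assuming a remote named gdrive, as in the commands later in this article; remote_exists is a hypothetical helper built on rclone listremotes, which prints one configured remote per line in the form name:.

```shell
# Hypothetical helper: succeed only if an rclone remote with this name is configured.
remote_exists() {
  # -x: match the whole line, -F: treat the name as a fixed string
  rclone listremotes | grep -qxF "$1:"
}

# Usage:
# remote_exists gdrive || { echo "Run 'rclone config' first" >&2; exit 1; }
```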

To make uploading as fast as possible, we pack the folder into a single archive, using zip, for example: uploading one compressed file is usually much faster than uploading many small files one by one. On Debian and many Debian-based distributions, you can install zip with:

apt install zip
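Since the script depends on several external tools, a small check at the top can fail early with a clear message instead of halfway through a backup. require_tools is a hypothetical helper; the tool list in the usage line assumes the commands used in this article.

```shell
# Hypothetical helper: report every missing command, return 1 if any is missing.
require_tools() {
  local tool missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "Required tool not found: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
# require_tools docker rsync zip rclone || exit 1
```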

Script

Now let’s move on to an example bash script that makes the backup. Create a backup.sh file in the same folder as your docker-compose.yaml. Now, let’s go line by line.

If you care about the HA database and are not using an external database with Home Assistant, such as MariaDB, you need to stop your Docker containers first. Pass the path to your docker-compose.yaml with -f so the script can be run from any location. It is also useful to add a short delay after stopping the containers to allow any remaining file writes to finish:

docker compose -f /root/docker-compose.yaml stop
sleep 5s

Then copy the configuration folders of the containers you want to back up:

rsync -a /root/esphome /media/external1/backup/smart_home_stack/
rsync -a /root/homeassistant /media/external1/backup/smart_home_stack/
rsync -a /root/mosquitto /media/external1/backup/smart_home_stack/
rsync -a /root/zigbee2mqtt /media/external1/backup/smart_home_stack/
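The four rsync calls can also be written as a loop, so adding another container's folder becomes a one-line change. This is only a sketch: backup_folders and BACKUP_DEST are hypothetical names, and this version keeps copying the remaining folders if one of them fails, reporting the failure through its return status.

```shell
# Destination used in this article (note the trailing slash).
BACKUP_DEST=/media/external1/backup/smart_home_stack/

# backup_folders DEST SRC... : rsync each source folder into DEST
backup_folders() {
  local dest=$1 src status=0
  shift
  for src in "$@"; do
    # No trailing slash on $src, so rsync creates DEST/<folder name>
    rsync -a "$src" "$dest" || status=1
  done
  return "$status"
}

# Usage:
# backup_folders "$BACKUP_DEST" /root/esphome /root/homeassistant /root/mosquitto /root/zigbee2mqtt
```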

Since all the necessary data has been copied, we can start the containers again right away to minimize downtime:

docker compose -f /root/docker-compose.yaml start
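One weakness of a plain stop, copy, start sequence is that the start step could be skipped if the script is later made to exit on errors (for example with set -e). The hypothetical with_stack_stopped function below always starts the containers again and passes the backup command's exit status back to the caller. STOP_DELAY is an assumed setting that defaults to the same 5 seconds used above; the compose file path is the one from this article.

```shell
COMPOSE_FILE=/root/docker-compose.yaml

# with_stack_stopped CMD... : stop the stack, run CMD, always start the stack again
with_stack_stopped() {
  docker compose -f "$COMPOSE_FILE" stop || return 1
  sleep "${STOP_DELAY:-5}"   # let containers finish writing files
  local status=0
  "$@" || status=$?
  # Bring the containers back whatever the backup command returned
  docker compose -f "$COMPOSE_FILE" start
  return "$status"
}

# Usage:
# with_stack_stopped rsync -a /root/homeassistant /media/external1/backup/smart_home_stack/
```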

Now the /media/external1/backup/smart_home_stack/ folder contains all the backed-up files and folders.

Next is archiving. We could store every backup version separately on the cloud storage service of our choice. In that case, we need to declare a variable in our script that holds the current date and time:

dt=$(date '+%Y%m%d.%H%M%S')

If you want to store only the latest backup, make it a constant instead:

dt="latest"
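To switch between the two options without commenting lines in and out, the choice can be wrapped in a small function. archive_suffix and its yes/no argument are hypothetical; the timestamp format is the same as above, which also makes archive names sort chronologically.

```shell
# archive_suffix yes|no : print a timestamp suffix, or "latest"
archive_suffix() {
  if [ "$1" = "yes" ]; then
    date '+%Y%m%d.%H%M%S'   # e.g. 20240131.030000 for 31 Jan 2024, 03:00:00
  else
    echo "latest"
  fi
}

dt=$(archive_suffix yes)
echo "smart_home_stack_$dt.zip"
```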

Now we need to change the working directory to a location where our local backups are stored.

cd /media/external1/backup

Then archive the smart_home_stack folder, using the variable we created earlier in the file name:

zip -rq smart_home_stack_$dt.zip ./smart_home_stack

-r adds everything inside smart_home_stack recursively, and -q stands for “quiet”, which suppresses most console output during archiving.
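If cd fails, for example because the backup disk is not mounted, the commands that follow run in whatever directory the script started in. This is a minimal sketch that runs zip only when cd succeeded, doing both in a subshell so the caller's working directory is unchanged; make_archive is a hypothetical name, and smart_home_stack is the folder used in this article.

```shell
# make_archive BACKUP_ROOT ARCHIVE_NAME : zip ./smart_home_stack inside BACKUP_ROOT
make_archive() {
  local backup_root=$1 name=$2
  # The subshell keeps the cd local; zip only runs if cd succeeded
  ( cd "$backup_root" && zip -rq "$name" ./smart_home_stack )
}

# Usage:
# make_archive /media/external1/backup "smart_home_stack_$dt.zip" || exit 1
```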

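Upload the archive to the cloud. Here, gdrive is the name of the rclone remote you configured, and backups is the destination folder on it: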

rclone copy ./smart_home_stack_$dt.zip gdrive:backups

Delete the local archive:

rm -f ./smart_home_stack_$dt.zip
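As written, the archive is deleted even if the upload failed. Here is a sketch of a hypothetical upload_and_clean function that removes the local archive only when rclone copy exits successfully, so a network or quota error does not cost you the archive.

```shell
# upload_and_clean ARCHIVE REMOTE_PATH : upload, then delete locally only on success
upload_and_clean() {
  local archive=$1 destination=$2
  if rclone copy "$archive" "$destination"; then
    rm -f "$archive"
  else
    echo "Upload failed, keeping $archive" >&2
    return 1
  fi
}

# Usage (remote and folder from this article):
# upload_and_clean "./smart_home_stack_$dt.zip" gdrive:backups
```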

Finally, delete backups older than one month from the cloud storage:

rclone delete gdrive:backups --min-age 1M
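Because rclone delete removes every matching file in the given path, previewing it first is a good habit: rclone's global --dry-run flag only reports what would be deleted. Note that in --min-age, rclone reads M as months, while a lowercase m would mean minutes. The hypothetical prune_old_backups wrapper below just passes any extra flags through to rclone.

```shell
# prune_old_backups REMOTE_PATH MAX_AGE [EXTRA_RCLONE_FLAGS...]
prune_old_backups() {
  local remote_path=$1 max_age=$2
  shift 2
  rclone delete "$remote_path" --min-age "$max_age" "$@"
}

# Preview first, then delete for real:
# prune_old_backups gdrive:backups 1M --dry-run
# prune_old_backups gdrive:backups 1M
```

To see what is currently stored, with sizes and modification times, rclone lsl gdrive:backups lists the folder.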

Here is a full backup.sh example:

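#!/bin/bash
# Stop the containers so their files (including the HA database) are not being written
docker compose -f /root/docker-compose.yaml stop
sleep 5s

# Copy the configuration folders to the local backup disk
rsync -a /root/esphome /media/external1/backup/smart_home_stack/
rsync -a /root/homeassistant /media/external1/backup/smart_home_stack/
rsync -a /root/mosquitto /media/external1/backup/smart_home_stack/
rsync -a /root/zigbee2mqtt /media/external1/backup/smart_home_stack/

# Start the containers again
docker compose -f /root/docker-compose.yaml start

# Uncomment the timestamp to keep every version, or use "latest" to keep only one
#dt=$(date '+%Y%m%d.%H%M%S')
dt="latest"

# Archive, upload, remove the local archive, and prune old cloud backups
cd /media/external1/backup || exit 1
zip -rq smart_home_stack_$dt.zip ./smart_home_stack
rclone copy ./smart_home_stack_$dt.zip gdrive:backups
rm -f ./smart_home_stack_$dt.zip
rclone delete gdrive:backups --min-age 1M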

Don’t forget to make your script executable:

chmod +x backup.sh
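Before scheduling the script, you can check it for syntax errors without running it, so nothing is stopped or uploaded. bash -n parses a script without executing any of its commands; check_script is a hypothetical wrapper around it.

```shell
# check_script FILE : parse FILE with bash without executing it
check_script() {
  bash -n "$1" && echo "$1: syntax OK"
}

# Usage:
# check_script /root/backup.sh
```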

Automating

We can automate our script with cron. To edit your crontab file, run:

crontab -e

For example, to run a backup script every day at 3 am, add this line:

0 3 * * * /root/backup.sh > /root/backup.log 2>&1

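Here, the first five fields (0 3 * * *) are the schedule: minute, hour, day of month, month, and day of week. /root/backup.sh is the path to our script, and > /root/backup.log 2>&1 writes all output, including error messages, to a log file that is overwritten on each run.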

You can find more schedule examples here.
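If you prefer not to open an editor, the same job can be added from the shell. This is a sketch assuming the schedule and paths above; install_cron_job is a hypothetical helper that appends the line to the current user's crontab only if it is not already there. crontab -l fails when no crontab exists yet, which is why its errors are ignored.

```shell
CRON_LINE='0 3 * * * /root/backup.sh > /root/backup.log 2>&1'

# install_cron_job LINE : add LINE to the user's crontab unless it is already present
install_cron_job() {
  local line=$1
  if crontab -l 2>/dev/null | grep -qxF -e "$line"; then
    echo "cron job already installed"
    return 0
  fi
  # Re-emit the existing entries plus the new line, and load them as the new crontab
  { crontab -l 2>/dev/null; printf '%s\n' "$line"; } | crontab -
}

# Usage:
# install_cron_job "$CRON_LINE"
```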